Serverless Microservices with Python on Kubernetes


Serverless Computing is Exploding

As applications move to new models of production, distribution, and management, it makes sense to hand the behind-the-scenes processes over to third parties, a further step toward decentralization. That is exactly what serverless computing does, and startups and large companies alike are adopting this new way of running applications.

In this post, we answer two questions: what is serverless all about, and how does this new trend affect the way people write and deploy applications?

Serverless Computing

“Serverless” denotes a kind of software architecture in which application logic runs in an environment without visible processes, operating systems, servers, or virtual machines. Such an environment does still run on top of an operating system and use physical servers or virtual machines, but the responsibility for provisioning and managing that infrastructure belongs entirely to the service provider. As a result, a software developer can focus on writing code.

Serverless Computing Advances the way Applications are Developed

Serverless applications will change the way we develop applications. Traditionally, many business rules, boundary conditions, and complex integrations are built into an application. This prolongs development, introduces many defects, and in effect hard-wires the system to a particular set of functional requirements. The serverless concept moves us away from dealing with complex system requirements and lets the application evolve over time. These microservices are also easy to deploy without disrupting the rest of the system.

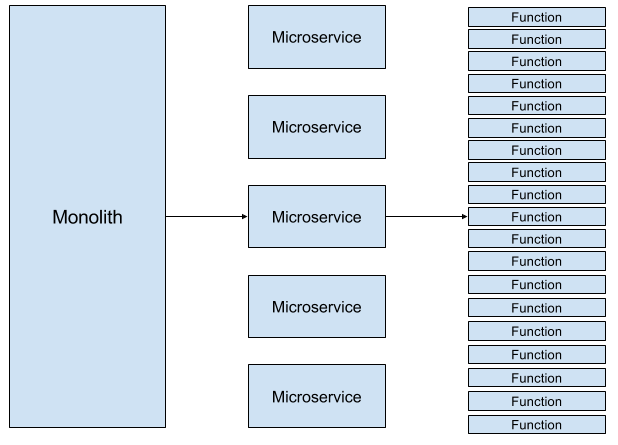

The figure above shows how application development has changed over time.

Monolith - A monolithic application puts all of its functionality into a single process and scales by replicating the monolith on multiple servers.

Microservice - A microservice architecture puts each piece of functionality into a separate service and scales by distributing these services across servers, replicating them as needed.

FaaS (Function as a Service) - Microservices are split further into individual functions that are triggered by events.

Monolith => Microservice => FaaS
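To make the FaaS idea concrete, here is a minimal, platform-independent sketch of small functions triggered by events. The registry, the `on` decorator, the `dispatch` helper, and the event name `employee.created` are hypothetical illustrations. They are not the API of NexaStack or of any FaaS provider, because a real platform performs this routing for you.

```python
from typing import Any, Callable, Dict, List

# Hypothetical in-process registry mapping an event type to the functions
# subscribed to it. A real FaaS platform performs this routing itself.
Handler = Callable[[Dict[str, Any]], Any]
_registry: Dict[str, List[Handler]] = {}


def on(event_type: str) -> Callable[[Handler], Handler]:
    """Register the decorated function as a handler for event_type."""
    def decorator(func: Handler) -> Handler:
        _registry.setdefault(event_type, []).append(func)
        return func
    return decorator


def dispatch(event_type: str, payload: Dict[str, Any]) -> List[Any]:
    """Invoke every function registered for event_type, in registration order."""
    return [handler(payload) for handler in _registry.get(event_type, [])]


@on("employee.created")
def send_welcome_email(payload: Dict[str, Any]) -> str:
    # Each function does one small job in response to one kind of event.
    return f"welcome email queued for {payload['name']}"


@on("employee.created")
def update_headcount(payload: Dict[str, Any]) -> str:
    return f"headcount updated for department {payload['dept']}"


if __name__ == "__main__":
    for result in dispatch("employee.created", {"name": "Asha", "dept": "R&D"}):
        print(result)
```

In a monolith, both jobs would live inside one process. In the FaaS model, each one is deployed, scaled, and billed as its own function.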

Let’s get started with deploying a serverless application on NexaStack. To create a function, you first package your code and its dependencies into a deployment package. Then you upload the deployment package to our environment to create your function. The two steps are:

- Creating a Deployment Package

- Uploading a Deployment Package


Database integration for your application

- Install MongoDB and configure it to get started.

- Create a database named EmployeeDB.

- Create a collection (MongoDB’s equivalent of a table) named Employee.

- Insert some records into the collection for the demo.

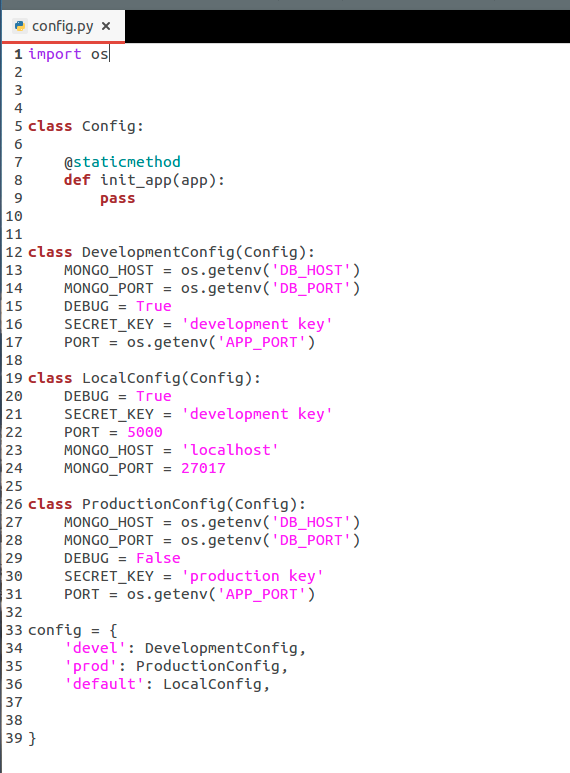
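The database steps above could be scripted with the pymongo driver, as in this minimal sketch. It assumes a MongoDB server at `mongodb://localhost:27017/`. Because the post does not show the actual schema, the Employee fields (`emp_id`, `name`, `department`) are hypothetical. MongoDB creates a database and a collection on the first insert, so no separate "create" call is needed.

```python
from typing import Any, Dict, List

# Sample records for the demo. Field names are hypothetical; the original
# post does not show the Employee schema.
SAMPLE_EMPLOYEES: List[Dict[str, Any]] = [
    {"emp_id": 1, "name": "Asha", "department": "Engineering"},
    {"emp_id": 2, "name": "Ben", "department": "Sales"},
    {"emp_id": 3, "name": "Chen", "department": "Support"},
]


def seed_employees(collection: Any, records: List[Dict[str, Any]]) -> int:
    """Insert records into a pymongo-style collection and return how many were inserted."""
    # Copy each record, because pymongo adds an "_id" field to the dicts it inserts.
    result = collection.insert_many([dict(r) for r in records])
    return len(result.inserted_ids)


def main() -> None:
    # Imported here so seed_employees can be exercised without pymongo installed.
    from pymongo import MongoClient

    # Assumes a local MongoDB server on the default port 27017.
    client = MongoClient("mongodb://localhost:27017/")
    employees = client["EmployeeDB"]["Employee"]  # both are created lazily on first write
    count = seed_employees(employees, SAMPLE_EMPLOYEES)
    print(f"inserted {count} employee records")
    client.close()
```

Call `main()` once the server is running. `seed_employees` takes the collection as a parameter, so you can try it against a stand-in object without a database.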

Write a file named “config.py” to set up the configuration for the serverless deployment.
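Here is one possible sketch of such a `config.py`. It reads settings from environment variables, so the same package can be deployed unchanged. Every variable name and default here (`MONGO_URI`, `MONGO_DB`, `MONGO_COLLECTION`, `PORT`, `DEBUG`) is an assumption, not something NexaStack requires.

```python
"""config.py: a hypothetical configuration module for the demo service.

Values come from environment variables, with local-development defaults.
The variable names are assumptions, not settings required by NexaStack.
"""
import os

# MongoDB connection settings (the defaults assume a local server).
MONGO_URI = os.environ.get("MONGO_URI", "mongodb://localhost:27017/")
MONGO_DB = os.environ.get("MONGO_DB", "EmployeeDB")
MONGO_COLLECTION = os.environ.get("MONGO_COLLECTION", "Employee")

# Port the service listens on; it should match the port entered when the
# function is created.
PORT = int(os.environ.get("PORT", "8080"))

# "1", "true", or "yes" (any case) enables debug mode.
DEBUG = os.environ.get("DEBUG", "false").strip().lower() in {"1", "true", "yes"}
```

Keeping configuration outside the code means a change of database host or port needs only new environment values, not a rebuilt deployment package.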

Creating a Deployment Package – Organizing Code and Dependencies

To create a function, you first create a function deployment package: a .zip file containing your code and any dependencies.

- Create a directory, for example, project-dir.

- Save all of your Python source files (the .py files) at the root level of this directory. The folder named “app” serves this purpose in the demo.

- List any extra libraries your code uses in a file named “requirements.txt”.

- Zip the contents of the project-dir directory; the resulting .zip file is your deployment package.

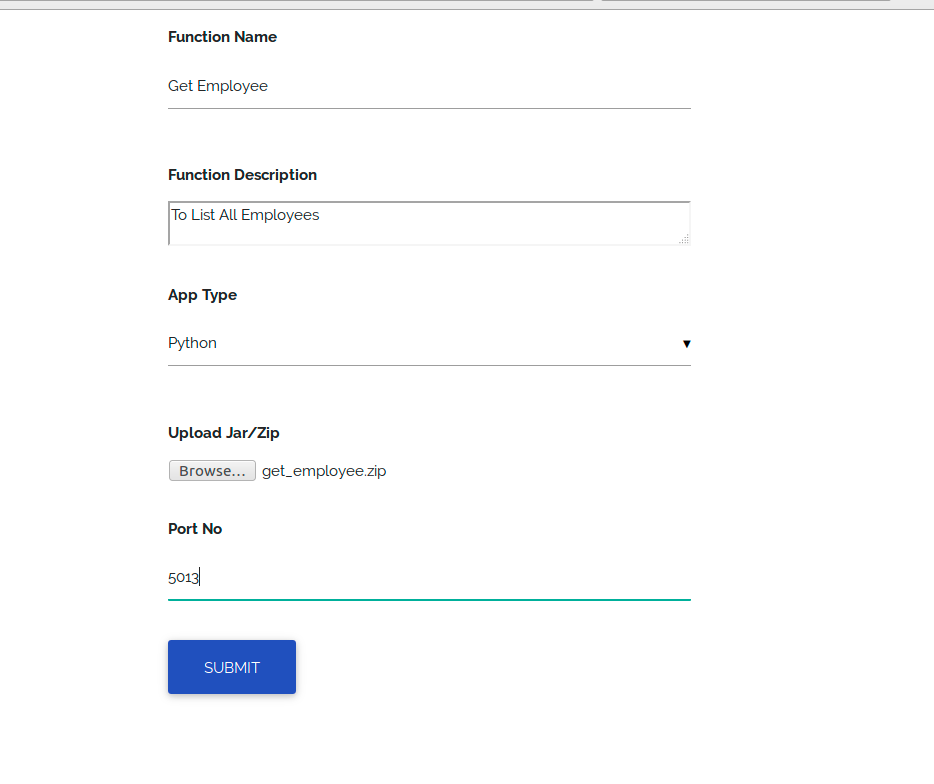
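The packaging steps above can be scripted with Python’s standard `zipfile` module. This sketch zips the contents of the directory, not the directory itself, so the .py files and requirements.txt end up at the root of the archive. The function name `build_package` and the output file name are hypothetical.

```python
import zipfile
from pathlib import Path
from typing import List


def build_package(project_dir: str, output_zip: str) -> List[str]:
    """Zip the *contents* of project_dir into output_zip and return the member names."""
    root = Path(project_dir)
    out = Path(output_zip).resolve()
    with zipfile.ZipFile(out, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for path in sorted(root.rglob("*")):
            # Skip directories (their files are added individually), the archive
            # itself if it lives inside project_dir, and bytecode caches.
            if path.is_dir() or path.resolve() == out or "__pycache__" in path.parts:
                continue
            # An arcname relative to project_dir keeps the .py files and
            # requirements.txt at the root of the archive.
            zf.write(path, arcname=path.relative_to(root).as_posix())
        return zf.namelist()
```

For example, `build_package("app", "deployment-package.zip")` returns the member names. This is a quick way to confirm that nothing is nested one folder too deep.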

Creating a Function

- Fill in the fields as shown in the picture below to upload a function.

- In the port number field, enter the port on which you want your service to run.

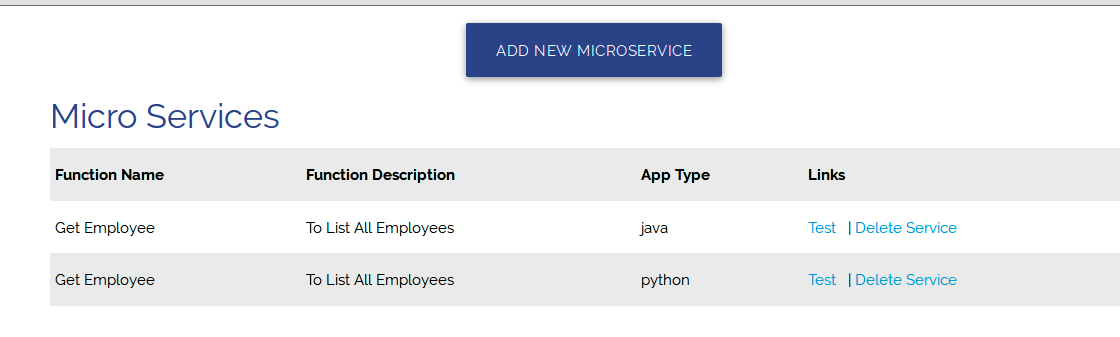
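The uploaded code has to listen on the port entered in that field. The demo’s own application code is not shown, so here is a stand-in sketch that uses only the standard library’s `http.server`. The `/health` endpoint and the `PORT` environment variable are assumptions for illustration.

```python
import json
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


class EmployeeHandler(BaseHTTPRequestHandler):
    """Tiny JSON endpoint standing in for the demo's service (hypothetical)."""

    def do_GET(self) -> None:
        if self.path == "/health":
            body, status = {"status": "ok"}, 200
        else:
            body, status = {"error": "not found"}, 404
        payload = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def make_server(port: int, host: str = "0.0.0.0") -> HTTPServer:
    # Binding to all interfaces lets the platform route traffic into the container.
    return HTTPServer((host, port), EmployeeHandler)


def run() -> None:
    # PORT is an assumed environment variable; its value should match the
    # port entered in the function's port number field.
    server = make_server(int(os.environ.get("PORT", "8080")))
    server.serve_forever()
```

Calling `run()` serves requests until the process is stopped. The Python documentation warns that `http.server` is not recommended for production, so treat this as a demo stand-in only.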

Testing

- Once the function is uploaded, the service is ready to test.

- Click “Test”, and your service comes up within a few seconds on the port number you specified in the previous step.
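Besides the “Test” button, you may want to check the deployed endpoint from a script, for example as a smoke test. This sketch uses only `urllib` from the standard library. The `/health` path and the `check_service` helper are hypothetical, and `base_url` should point at the host and port you deployed to.

```python
import urllib.error
import urllib.request
from typing import Tuple


def check_service(base_url: str, path: str = "/health", timeout: float = 5.0) -> Tuple[int, str]:
    """GET base_url + path and return (HTTP status, decoded body).

    base_url is the deployed host and port, e.g. "http://<host>:8080".
    """
    url = base_url.rstrip("/") + path
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status, resp.read().decode("utf-8")
    except urllib.error.HTTPError as err:
        # A 4xx/5xx response still proves the service is reachable on that port.
        return err.code, err.read().decode("utf-8")
```

Connection failures such as a refused port or a timeout are not caught, so they surface as exceptions. That is usually what you want when the service failed to start.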

Serverless Architecture: Still in development

FaaS technologies still have some maturity problems at this point:

- There is no organizational framework for FaaS. Your company may write hundreds or even thousands of functions, and after a while nobody may know which functionality lives in which function, which functions are still in use, or whether code has been duplicated.

- Serverless is not efficient for long-running applications. In some cases, long-running tasks can be much more expensive than running the same workload on a dedicated server or virtual machine.

- Vendor lock-in: because the application depends entirely on a third-party provider, you do not have full control over it. You also depend on the platform’s availability, and the platform’s API and costs can change.

- Serverless (and microservice) architectures add overhead to every function or microservice call. There are no “local” operations; you cannot assume that two communicating functions are located on the same server.

- Cold start: when a request arrives and no instance is running, the platform first needs to initialize internal resources (for example, start a container). The platform may also release those resources (for example, stop the container) if your function has received no requests for a long time, so the next request pays the start-up cost again. One way to avoid cold starts is to keep your function active by sending it periodic requests.

- Some FaaS implementations, such as AWS Lambda, do not provide out-of-the-box tools for testing functions locally (assuming that developers will use the same cloud for testing). This is not ideal, especially since you pay for each function invocation. As a result, several third-party solutions try to fill this gap by letting you test functions locally.
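The keep-warm workaround for cold starts can be sketched as a loop that pings the function at a fixed interval. The helper names are hypothetical. The right interval depends on how long your platform keeps idle containers alive, which the post does not specify. On pay-per-call platforms, each ping is also a billed invocation.

```python
import logging
import threading
import urllib.request
from typing import Callable

log = logging.getLogger(__name__)


def http_ping(url: str, timeout: float = 5.0) -> None:
    """Send one GET request to url; errors propagate to the caller."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        resp.read()


def keep_warm(ping: Callable[[], None], interval_s: float, stop: threading.Event) -> int:
    """Call ping() every interval_s seconds until stop is set; return the attempt count.

    A failed ping is logged and counted, so a transient error does not end the loop.
    """
    count = 0
    while not stop.is_set():
        try:
            ping()
        except Exception:
            log.warning("keep-warm ping failed", exc_info=True)
        count += 1
        # Event.wait sleeps for interval_s but returns early once stop is set.
        stop.wait(interval_s)
    return count
```

For example, start it in a background thread with `threading.Thread(target=keep_warm, args=(lambda: http_ping(url), 300, stop), daemon=True).start()` and call `stop.set()` to end it. Here `url` and the 300-second interval are placeholders.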
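Whatever tool you choose, much of a function’s logic can be tested locally by calling its handler as a plain Python function. This sketch assumes an AWS Lambda-style `handler(event, context)` signature. The event fields, the `statusCode`/`body` response shape, and the in-memory employee data are hypothetical stand-ins for a real database lookup.

```python
import json
from typing import Any, Dict, Optional


def handler(event: Dict[str, Any], context: Optional[Any] = None) -> Dict[str, Any]:
    """Hypothetical function: look up an employee's name by id."""
    employees = {1: "Asha", 2: "Ben"}  # stand-in for a database query
    emp_id = event.get("emp_id")
    if emp_id not in employees:
        return {"statusCode": 404, "body": json.dumps({"error": "employee not found"})}
    return {"statusCode": 200, "body": json.dumps({"emp_id": emp_id, "name": employees[emp_id]})}


def invoke_locally(event: Dict[str, Any]) -> Dict[str, Any]:
    # No cloud round trip and no per-invocation charge: just call the function.
    return handler(event, context=None)
```

Because the handler is ordinary Python, these calls fit directly into a unit-test suite that runs before anything is uploaded.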

Summary

Serverless is growing, both in its technology and in its community, so jump on board and discuss business opportunities with us.

Don Offerings

Don is a leading software company in product development and a solution provider for DevOps, Big Data Integration, Real-Time Analytics, and Data Science.

Product NexaStack - Unified DevOps Platform. It provides monitoring of Kubernetes, Docker, OpenStack, and Big Data infrastructure and uses advanced machine learning techniques for log mining and log analytics.

Product ElixirData - Modern Data Integration Platform. It enables enterprises and other agencies to perform log analytics and log mining.

Product Akira.AI - an Automated & Knowledge-Driven Artificial Intelligence Platform that enables you to automate the infrastructure for training and deploying deep learning models on public cloud as well as on-premises.