Real Time Big Data Integration Solutions and Data Ingestion Patterns


Overview

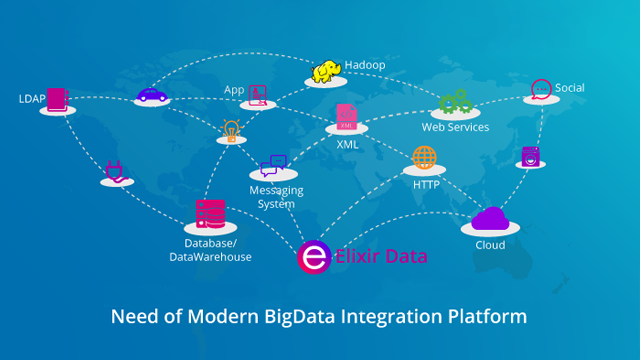

Data is everywhere, and we generate it from many different sources, such as social media, sensors, APIs, and databases.

Healthcare, Insurance, Finance, Banking, Energy, Telecom, Manufacturing, Retail, IoT, and M2M are the leading domains for data generation. Governments are using Big Data to improve the efficiency and distribution of services to the people.

The biggest challenge for enterprises is to create business value from the data coming from existing systems and new sources. Enterprises are looking for a Modern Data Integration Platform for Aggregation, Migration, Broadcast, Correlation, Data Management, and Security.

Traditional ETL is undergoing a paradigm shift toward business agility, and the need for a Modern Data Integration Platform is growing. Enterprises need such a platform for agility and for end-to-end operations and decision-making, which involves integrating data from different sources; batch, streaming, and real-time processing; Big Data management; Big Data governance; and security.

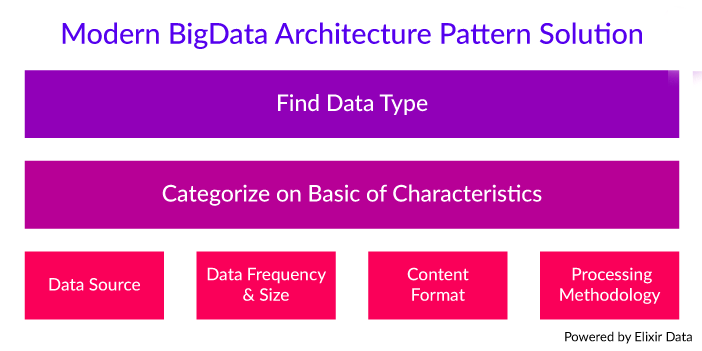

Different Types of Data -

- What type of data it is
- The format of the data content required
- Whether the data is transactional, historical, or master data
- The speed or frequency at which the data must be made available
- How to process the data, i.e., in real time or in batch mode

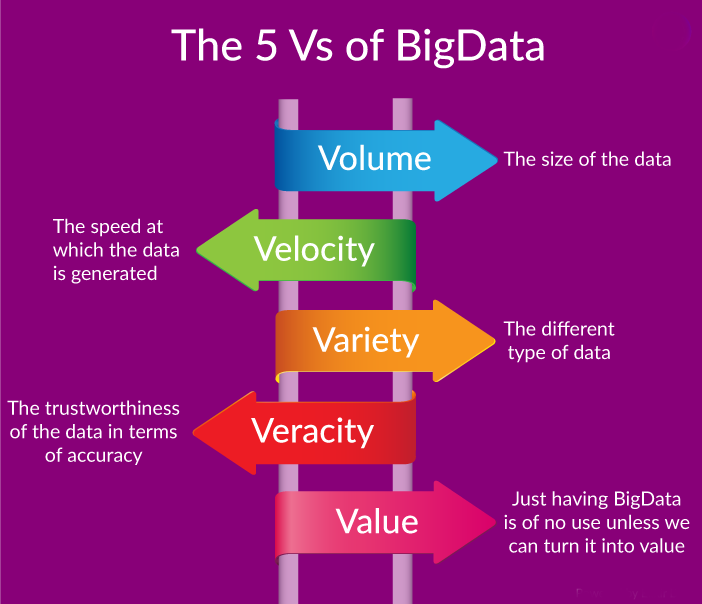

The 5 Vs of Big Data

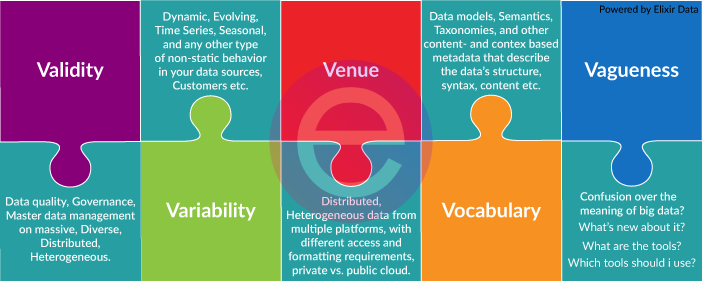

Additional 5 Vs of Big Data

What is Data Ingestion?

Data Ingestion comprises integrating structured and unstructured data from where it originated into a system where it can be stored and analyzed to make business decisions. Data ingestion may be continuous or asynchronous, real-time or batched, or both.
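To make the batch versus near-real-time distinction concrete, here is a minimal Python sketch. The function names (`ingest_batch`, `ingest_micro_batches`) and the sensor readings are illustrative assumptions, not part of any specific ingestion tool.

```python
from itertools import islice
from typing import Iterable, Iterator, List


def ingest_batch(source: Iterable[dict]) -> List[dict]:
    """Batch ingestion: read the whole source, then hand it over at once."""
    return list(source)


def ingest_micro_batches(source: Iterable[dict], size: int) -> Iterator[List[dict]]:
    """Near-real-time ingestion: emit small chunks as records arrive."""
    iterator = iter(source)
    while True:
        chunk = list(islice(iterator, size))
        if not chunk:
            return
        yield chunk


# Hypothetical sensor readings standing in for a real source.
readings = [{"sensor": "s1", "value": v} for v in range(5)]

all_at_once = ingest_batch(readings)
chunks = list(ingest_micro_batches(readings, size=2))
```

In the batch case the consumer waits for the full data set. In the micro-batch case it can start working on the first chunk while later records are still arriving.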


Characteristics of Big Data

Using the different Big Data types helps us identify the characteristics of Big Data, i.e., how it is collected, processed, and analyzed, and how we deploy it on-premises or in a public or hybrid cloud.

- Data type - Type of data
  - Transactional
  - Historical
  - Master Data and others
- Data Content Format - Format of data
  - Structured (RDBMS)
  - Unstructured (audio, video, and images)
  - Semi-Structured
- Data Sizes - Data size, such as Small, Medium, Large, and Extra Large, which means we can receive data with sizes in bytes, KBs, MBs, or even GBs.
- Data Throughput and Latency - How much information is expected and at what frequency it arrives. Data throughput and latency depend on the data sources:
  - On-demand, as with Social Media Data
  - Continuous feed, Real-Time (Weather Data, Transactional Data)
  - Time series (Time-Based Data)
- Processing Methodology - The type of technique to be applied for processing data (e.g., Predictive Analytics, Ad-Hoc Query and Reporting).
- Data Sources - Sources that generate data
  - The Web and Social Media
  - Machine-Generated
  - Human-Generated, etc.
- Data Consumers - A list of all possible consumers of the processed data:
  - Business processes
  - Business users
  - Enterprise applications
  - Individual people in various business roles
  - Part of the process flows
  - Other data repositories or business applications

Big Data's Big Impact Across Industries

Data Integration Architecture

Data Integration begins with Data Ingestion: bringing together data from different sources, e.g., RDBMS, social media, sensors, and M2M, and then using data mapping, schema definition, and data transformation to build a data platform for analytics and reporting. You need to deliver the right data in the right format within the right time frame.

Big Data integration provides a unified view of data for Business Agility and Decision Making, and it involves -

- Discovering the Data
- Profiling the Data
- Understanding the Data
- Improving the Data
- Transforming the Data
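As an illustration of the profiling step, the following Python sketch counts missing values, distinct values, and value types per field. The `profile` function and the customer records are hypothetical, and the sketch assumes field values are hashable.

```python
from collections import Counter
from typing import Any, Dict, List


def profile(records: List[Dict[str, Any]]) -> Dict[str, Dict[str, Any]]:
    """Return per-field counts of missing values, distinct values, and types."""
    fields = sorted({key for record in records for key in record})
    report = {}
    for field in fields:
        # A field that is absent from a record is treated like a None value.
        values = [record.get(field) for record in records]
        present = [v for v in values if v is not None]
        report[field] = {
            "missing": len(values) - len(present),
            "distinct": len(set(present)),  # assumes hashable values
            "types": dict(Counter(type(v).__name__ for v in present)),
        }
    return report


# Hypothetical customer records with gaps, as a profiling target.
customers = [
    {"id": 1, "country": "IN", "age": 34},
    {"id": 2, "country": "US", "age": None},
    {"id": 3, "country": "IN"},
]
report = profile(customers)
```

A report like this shows which fields need improving (here `age`, which is missing in two of three records) before any transformation is attempted.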

A Data Integration project usually involves the following steps -

- Ingest data from different sources, where it resides in multiple formats.
- Transform data, i.e., convert it into a single format so that the associated records are easier to manage. The Data Pipeline is the main component used for integration or transformation.
- Metadata management: centralized data collection.
- Store the transformed data so that analysts can get exactly what they need when the business needs it, whether in batch or in real time.
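The four steps above can be sketched end to end in a few lines of Python. Everything here is an assumption made for illustration: the two inline sources, the field names, and the in-memory dictionary used as the store.

```python
import csv
import io
import json
from typing import Dict, List

# Hypothetical inputs: the same kind of record arriving in two formats.
CSV_SOURCE = "id,amount\n1,10.50\n2,7.25\n"
JSON_SOURCE = '[{"order_id": 3, "total": "4.00"}]'


def ingest() -> Dict[str, List[dict]]:
    """Step 1: read raw records from each source, keeping its native shape."""
    return {
        "csv": list(csv.DictReader(io.StringIO(CSV_SOURCE))),
        "json": json.loads(JSON_SOURCE),
    }


def transform(raw: Dict[str, List[dict]]) -> List[dict]:
    """Step 2: convert every record to one format: {"id": int, "amount": float}."""
    unified = [{"id": int(r["id"]), "amount": float(r["amount"])} for r in raw["csv"]]
    unified += [{"id": int(r["order_id"]), "amount": float(r["total"])} for r in raw["json"]]
    return unified


def describe(raw: Dict[str, List[dict]]) -> Dict[str, dict]:
    """Step 3: collect metadata (field names and row counts) in one place."""
    return {
        name: {"fields": sorted(rows[0].keys()) if rows else [], "rows": len(rows)}
        for name, rows in raw.items()
    }


raw = ingest()
metadata = describe(raw)
# Step 4: store the transformed records, keyed by id for quick lookup.
store = {record["id"]: record for record in transform(raw)}
```

In a real project each step would be backed by dedicated tooling, but the division of responsibilities stays the same.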

Why is Data Integration Important?

- Make Data Records Centralized - Data is stored in different formats: tabular, graphical, hierarchical, structured, and unstructured. To make a business decision, a user has to go through all these formats before reaching a conclusion. A single image that combines the different formats therefore supports better decision making.
- Format Selecting Freedom - Every user has a different way or style of solving a problem. Users are free to use data in whatever system and format suits them best.
- Reduce Data Complexity - When data resides in different formats, complexity grows with data size. This degrades decision-making capability and means much more time is spent understanding how to proceed with the data.
- Prioritize the Data - With a single image of all the data records, it is easy to prioritize the data, i.e., to find out what is most useful and what is not required for the business.
- Better Understanding of Information - A single image of the data also helps non-technical users understand how effectively the data records can be used. When solving any problem, one can win the game only if a non-technical person can understand what is being said.
- Keeping Information Up to Date - Data keeps increasing daily, and much of what is new needs to be added to the existing data. Data Integration makes it easy to keep the information up to date.

Big Data Security and Big Data Governance

If a business wants in on the world of Big Data Analytics, it first needs to be aware of some of the biggest security concerns. Big Data can include both used and unused data, and its proper utilization is necessary. Along with proper usage, Big Data security is a major concern. Without the right security and encryption solutions in place, Big Data can mean a big problem.

- Big Data Governance

  Big Data Governance means effectively managing the data in your organization. Data is very valuable to an organization, but there are still some issues involved in managing it:

  - Accuracy
  - Availability
  - Usability
  - Security

- Big Data Security

  Without the right security, authentication, encryption, and data monitoring solutions in place, Big Data can be a big problem.

Internet of Things, M2M and Autonomous Driving

With the rise of the Internet of Things, M2M communication, and autonomous vehicles, the data generated by a driverless car alone will be around 25 gigabytes per hour, which will exceed the data produced by social media usage and mobile devices.

With the massive amount of data coming from data producers, we need to solve the problem of data integration for batch, streaming, and real-time data sources. Data integration will therefore play a major role in defining an IoT strategy.

Real-Time Big Data Integration

Data Pipeline is a data processing engine that runs inside your application. It transforms all incoming data into a standard format so that it can be prepared for analysis and visualization. Data Pipeline does not impose a particular structure on your data. It is built on the Java Virtual Machine (JVM).

Need for a Data Pipeline

- Convert incoming data to a common format.
- Prepare data for Analysis and Visualization.
- Migrate between Databases.
- Share Data Processing logic across Web Apps, Batch Jobs, and APIs.
- Power your Data Ingestion and Integration tools.
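The idea of sharing processing logic across web apps, batch jobs, and APIs can be illustrated with a small Python sketch. This is not the API of the JVM-based Data Pipeline engine described above; `make_pipeline`, the two steps, and both entry points are hypothetical names chosen for the example.

```python
from typing import Callable, Iterable, List

Record = dict
Step = Callable[[Record], Record]


def make_pipeline(*steps: Step) -> Step:
    """Compose steps into one function that processes a single record."""
    def run(record: Record) -> Record:
        for step in steps:
            record = step(record)
        return record
    return run


def lowercase_keys(record: Record) -> Record:
    """Normalize field names so every source uses the same keys."""
    return {key.lower(): value for key, value in record.items()}


def parse_temperature(record: Record) -> Record:
    """Convert the temperature field from text to a float."""
    return {**record, "temp_c": float(record["temp_c"])}


# The processing logic is defined once...
pipeline = make_pipeline(lowercase_keys, parse_temperature)


def handle_api_request(payload: Record) -> Record:
    """Entry point a web handler might call for one incoming record."""
    return pipeline(payload)


def run_batch_job(rows: Iterable[Record]) -> List[Record]:
    """Entry point a scheduled job might call for many records."""
    return [pipeline(row) for row in rows]


# ...and reused by both entry points.
single = handle_api_request({"Sensor": "s1", "TEMP_C": "21.5"})
many = run_batch_job([{"sensor": "s2", "temp_c": "19"}])
```

Because both entry points call the same `pipeline`, a change to the conversion rules applies to the API path and the batch path at once.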

Real-Time Big Data Platform

It's well said that “Making Good Decisions is a crucial skill at every level.” Big Data also involves making real-time decisions. "Real time" has many meanings: it can express speed, execution frequency, or how much time is consumed at runtime. That's why real-time solutions are designed to satisfy specific business requirements.

Real-Time Data Integration supports real-time business intelligence and analytics. Today, many technologies have evolved in data ingestion, data storage, and data management to handle a variety of data in multiple formats coming from various sources.

When data in motion needs to travel across the solution for real-time Data Integration, each tool and platform involved needs to have some real-time capability.

Modern Big Data Solutions


ElixirData - Full Stack Modern Big Data Integration Platform

- ElixirData is a Modern Big Data Integration Platform that enables you to secure your Data Pipeline with Data Integrity and Data Management. It gives you the freedom to work in your preferred data stack and programming language.
- It integrates well with NoSQL and the Big Data ecosystem, traditional databases, and business tools.
- ElixirData provides the flexibility to deploy on-premises or in a hybrid or public cloud. Choose the cloud platform that suits your enterprise requirements.
- It allows you to build Data Pipelines from different sources with different formats. The Integration Pipeline comes into action with Automapper.
- Java, .NET, Python, and Go can be used to define real-time data transformation pipelines. Error handling and data monitoring are also supported on the pipeline.
- ElixirData is a modern solution to the challenges of Data Integration.

Types of Data Integration Approaches

- Manual Integration

  Here, clients reach out to all relevant information systems and manually combine selected data. Users also need to know the frameworks, data representation, and data semantics involved.

- Common User Interface

  Here, the user works with a standard interface that includes the relevant information systems. These are still presented separately, so the integration of data still has to be done by the user.

- Integration by Applications

  This approach uses applications that access various data sources and return results to the user.

Difference Between ETL and Data Integration Methods

ETL

ETL stands for Extract, Transform, and Load. In ETL, we extract data from different sources, structured or unstructured. Once the data is available in the staging area, it is all on one platform and in one database, where it is transformed. Finally, we load the data into a warehouse in the form of fact and dimension tables.
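The flow from extraction through a staging area to fact and dimension tables can be sketched with Python's built-in `sqlite3` module. The table names, columns, and sales rows below are assumptions made for illustration, and an in-memory database stands in for a real warehouse.

```python
import sqlite3

# Extract: hypothetical rows pulled from a source system.
source_rows = [
    ("2024-01-05", "Widget", 2, 9.99),
    ("2024-01-05", "Gadget", 1, 24.50),
    ("2024-01-06", "Widget", 5, 9.99),
]

db = sqlite3.connect(":memory:")
db.executescript("""
    CREATE TABLE staging_sales (sale_date TEXT, product TEXT, qty INTEGER, unit_price REAL);
    CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, name TEXT UNIQUE);
    CREATE TABLE fact_sales (sale_date TEXT, product_id INTEGER, qty INTEGER, revenue REAL);
""")

# Land the extracted rows in the staging area.
db.executemany("INSERT INTO staging_sales VALUES (?, ?, ?, ?)", source_rows)

# Transform and load: build the dimension, then the fact table keyed by it.
db.execute("INSERT INTO dim_product (name) SELECT DISTINCT product FROM staging_sales")
db.execute("""
    INSERT INTO fact_sales
    SELECT s.sale_date, d.product_id, s.qty, s.qty * s.unit_price
    FROM staging_sales AS s JOIN dim_product AS d ON d.name = s.product
""")
db.commit()

# Query the warehouse: total revenue per product.
revenue_by_product = dict(db.execute("""
    SELECT d.name, ROUND(SUM(f.revenue), 2)
    FROM fact_sales AS f JOIN dim_product AS d USING (product_id)
    GROUP BY d.name
"""))
```

The dimension table holds each product once, while the fact table holds one row per sale and refers to the product by key. This is the fact-and-dimension layout mentioned above.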

Data Integration

Data integration involves combining data from various sources, which are stored using different technologies, and providing a unified view of the data. It includes multiple techniques -

- Manual Integration
- Physical Integration
- Virtual Integration
